ttslearn.dnntts¶

DNN音声合成のためのモジュールです。

TTS¶

The TTS functionality is accessible from ttslearn.dnntts.*

-

class

ttslearn.dnntts.tts.DNNTTS(model_dir=None, device='cpu')[source]¶ DNN-based text-to-speech

- Parameters

Examples:

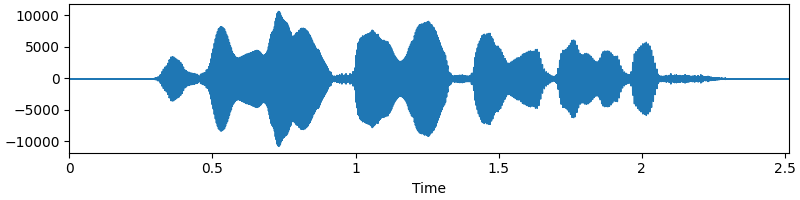

from ttslearn.dnntts import DNNTTS import matplotlib.pyplot as plt engine = DNNTTS() wav, sr = engine.tts("日本語音声合成のデモです。") fig, ax = plt.subplots(figsize=(8,2)) librosa.display.waveplot(wav.astype(np.float32), sr, ax=ax)

Models¶

The following models are acceible from ttslearn.dnntts.*

Feed-forward DNN¶

Multi-stream functionality¶

Split streams from multi-stream features |

|

Split streams and do apply MLPG if stream has dynamic features |

|

Get windows for parameter generation |

|

Get static sizes for each feature stream |

|

Get static features from static+dynamic features |

Generation utility¶

Predict phoneme durations. |

|

Predict acoustic features. |

|

Generate waveform from acoustic features. |